███████ ██████

-

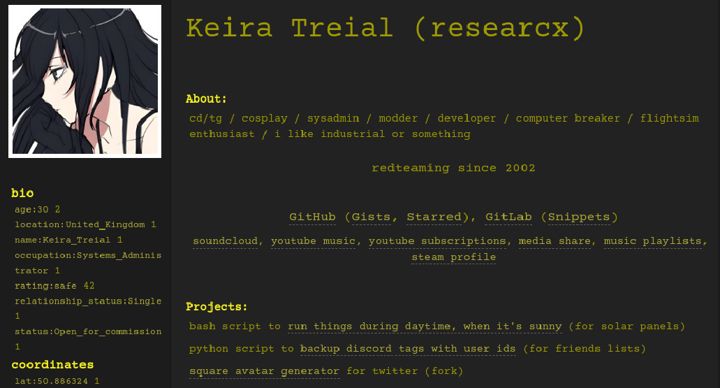

Blog, GitHub (Gists), GitLab (Snippets)

-

soundcloud, youtube music, music playlists, spotify, twitch, steam

sockdreams wishlist, amazon wishlist, sponsor me

-

I make real things without the use of generative networks. If you would like to commission me for code, software, penetration testing, security consultation, graphic design, web design, video editing, funny Photoshop edits or cosplay requests, feel free to contact me here.

Don't need a commission but like what I do? You can support me with a gift or a donation!

Things that I've done:

Automator Workflows for macOS

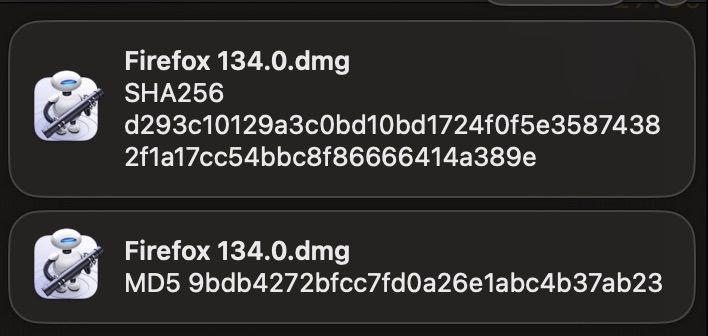

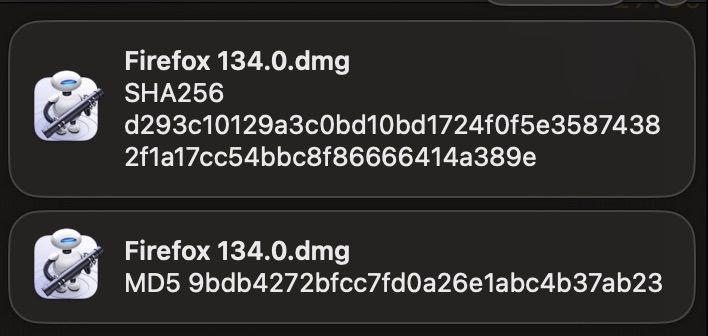

Display Checksum

Displays an MD5 and SHA-256 checksum for the selected file.

Available in "Quick Actions > Display Checksum" for all files.

Download
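For readers without Automator, the same idea can be sketched in Python. This is a minimal illustration and not the workflow's actual implementation; the function name `file_checksums` and the chunk size are assumptions made for the example.

```python
import hashlib


def file_checksums(path, chunk_size=65536):
    """Return (md5_hex, sha256_hex) for the file at `path`.

    Hypothetical helper: reads the file in chunks so large files
    do not have to fit in memory.
    """
    md5 = hashlib.md5()
    sha256 = hashlib.sha256()
    with open(path, "rb") as f:
        # iter() with a sentinel keeps reading until read() returns b"".
        for chunk in iter(lambda: f.read(chunk_size), b""):
            md5.update(chunk)
            sha256.update(chunk)
    return md5.hexdigest(), sha256.hexdigest()
```

Calling `file_checksums("some-file.dmg")` returns both digests as hex strings, which can then be compared against the values published by the file's distributor.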

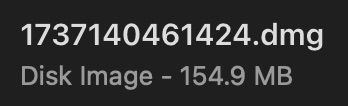

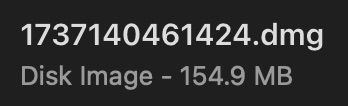

Auto Filename

Renames the selected file to <Current Unix Timestamp> + <3 Random Digits>, e.g. Firefox 134.0.dmg -> 1737140461424.dmg.

Available in "Quick Actions > Auto Filename" for all files.

Download
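The naming scheme can be sketched in Python as follows. This is an illustration only, not the workflow's code: the function name `auto_filename`, the `now` and `rng` parameters, and the zero-padding of the random digits are assumptions.

```python
import os
import random
import time


def auto_filename(path, now=None, rng=random):
    """Build the new name: <Unix timestamp><3 random digits><extension>.

    `now` and `rng` are hypothetical hooks that make the function testable.
    """
    stamp = int(time.time() if now is None else now)
    suffix = f"{rng.randrange(1000):03d}"  # assumed zero-padded to 3 digits
    directory, name = os.path.split(path)
    ext = os.path.splitext(name)[1]  # keeps only the last extension, e.g. ".dmg"
    return os.path.join(directory, f"{stamp}{suffix}{ext}")
```

To actually rename a file, the result can be passed to `os.rename(path, auto_filename(path))`.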

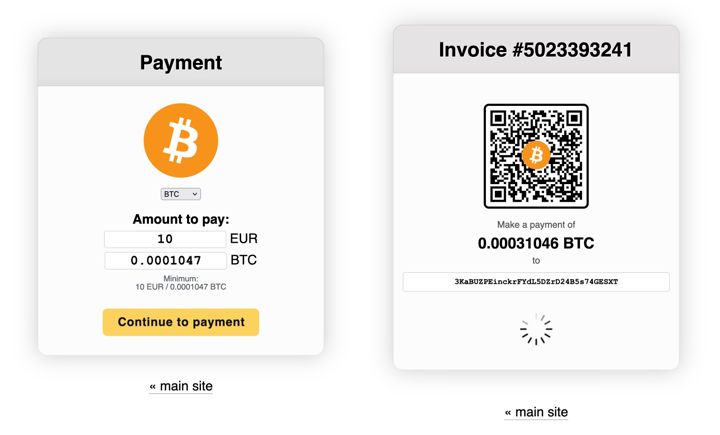

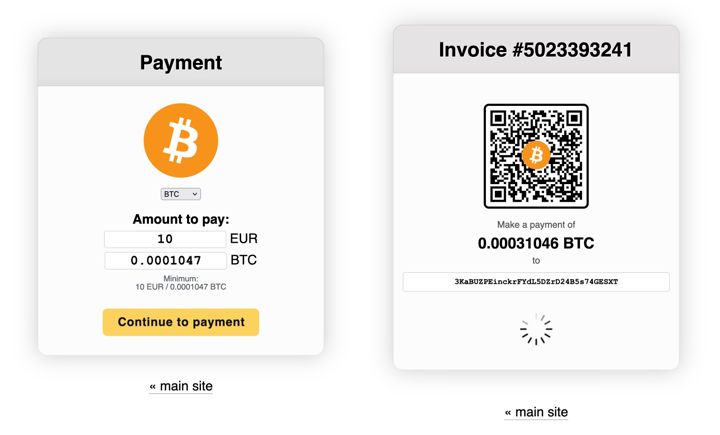

Cryptocurrency payment processor

A cryptocurrency donation & payment system.

Features:

- Works regardless of whether the user has JavaScript enabled.

- Generates a QR code for the payment address. (A new payment address is generated for each transaction.)

- Automatic currency conversion.

- Supports payment via a multitude of coins.

walletfind

Cryptocurrency Wallet Finder - Currently supports finding numerous wallet types, such as Monero, Bitcoin, Ethereum, Dogecoin, Blockchain, MetaMask and Electrum. It also looks for possible mnemonic phrases saved in files.

    import os, subprocess, sys
    from datetime import datetime

    start = datetime.now()

    if len(sys.argv) < 2:
        sys.exit("File path not specified. Exiting...")

    path = sys.argv[1]

    # List every file and directory under the given path using find(1).
    result = subprocess.run(["find", path], stdout=subprocess.PIPE)
    files_list = result.stdout.decode().splitlines()

    ignore_filetypes = [".png", ".bmp", ".gif", ".svg", ".mp3", ".m4a", ".wav", ".ogg", ".mp4", ".webm", ".mov", ".exe", ".dll", ".dmg", ".zip", ".tar", ".gz", ".tgz", ".rar", ".7z", ".html", ".css", ".php", ".js", ".map", ".less", ".py", ".pyc", ".yml", ".tmpl", ".sql", ".conf", ".sh"]
    ignore_filenames = ["robots.txt", "thumbs.db", ".DS_Store", "node_modules", "__pycache__", "site-packages", ".npmignore", ".git"]
    mnemonic_lengths = [9, 13, 16, 18, 21, 22, 24, 25]
    wallet_files = ["wallet.dat", "aes.json", "userkey.json", "dumpwallet.txt", "wallet-backup", "wallet.db", "change.json", "request.json", "legacy.json", "dump.txt", "privkeys.txt", "wallet-data.json", "main_dump.txt", "secondpass_dump.txt", "wallet.android", "wallet.desktop", "wallet-android-backup", "electrum", "identity.json", "wallet.aes", "metamask", "wallet.vault", "bitcoin", "bitcoinj", ".encrypted", "myetherwallet", "mnemonic", ".keys", "kdfparams"]
    wallet_strings = ["keymeta!", ".multibit.", "bitcoin", "hd_account", "hdm_address", "pbkdf2_iterations", "bitcoin.main", "addr_history", "master_public_key", "wallet_type", "{\"data\":", "pendingNativeCurrency", "Block_hashes", "mintpool", "mainnet", "testnet", "ethereum", "mnemonic", "kdfparams", "additional_seeds", "always_keep_local_backup", "BIP39", "BIP-39", "blockchain", "metamask", "electrum"]

    for current_file in files_list:
        if os.path.splitext(current_file)[1] in ignore_filetypes or os.path.basename(current_file) in ignore_filenames:
            continue

        # Flag paths whose name contains a known wallet file name.
        for wallet in wallet_files:
            if wallet in current_file:
                print("[POSSIBLE WALLET] [FILE] " + current_file)

        if not os.path.isfile(current_file):
            continue

        try:
            with open(current_file, 'rb') as current_file_binary:
                current_file_binary_data = current_file_binary.read()
        except OSError:
            # Unreadable file: skip it instead of reusing the previous file's data.
            continue

        # A word count matching a known mnemonic length may indicate a seed phrase.
        num_words = len(current_file_binary_data.split())
        if num_words in mnemonic_lengths:
            print("[POSSIBLE MNEMONIC] " + str(num_words) + " WORDS found in " + current_file)

        # Case-insensitive literal search (no regex, so "." and "{" match themselves).
        text = current_file_binary_data.decode(errors="ignore").lower()
        for wallet_data in wallet_strings:
            if wallet_data.lower() in text:
                print("[POSSIBLE WALLET] [DATA] \"" + wallet_data + "\" found in " + current_file)

    print("\nCompleted in " + str(datetime.now() - start))

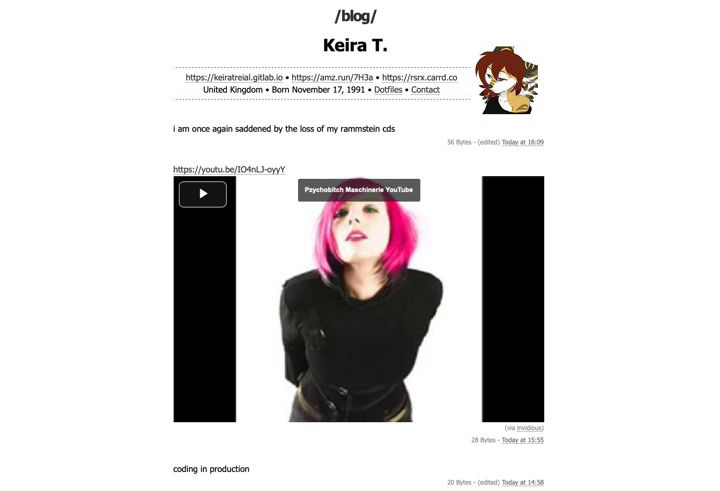

A script which parses Logseq journals into blog posts.

Features:

- uses invidio.us for youtube embeds (likely broken due to recent youtube changes)

- emoji to twemoji conversion (license: static/twemoji/LICENSE)

- highlightjs code syntax highlighting (license: static/highlightjs/LICENSE)

- thumbnail creation and embeds (note: thumbnails are heavily compressed):

  - external site embeds: youtube, soundcloud

  - .mp4, .webm and .mov thumbnails + embeds

  - .jpg, .jpeg, .jpe, .gif, .png and .bmp thumbnails + embeds

- supports darkmode browser extensions

- post file size displayed

- displaying of post creation and edit time

- user profile display

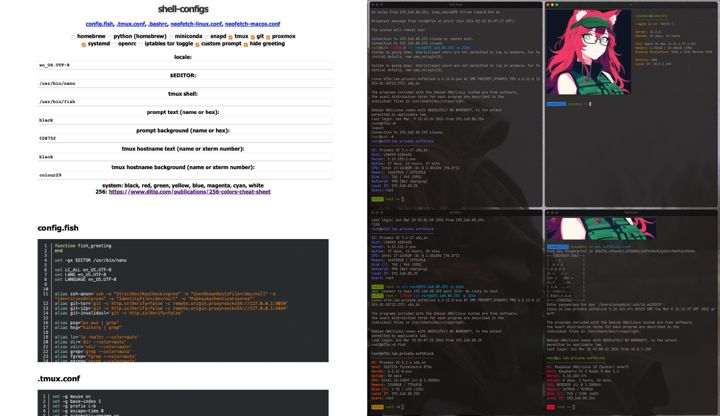

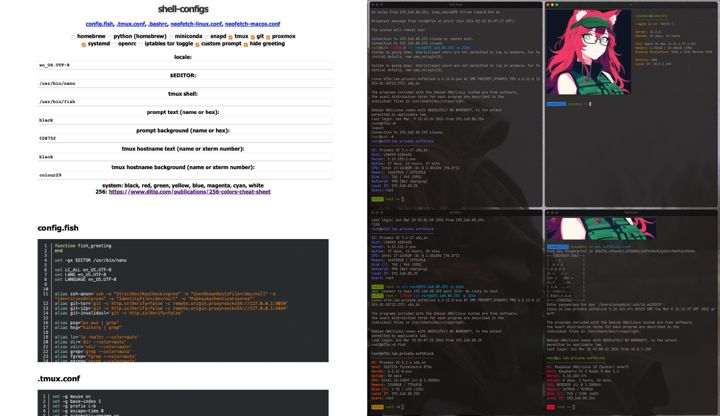

A JavaScript-based shell config generator.

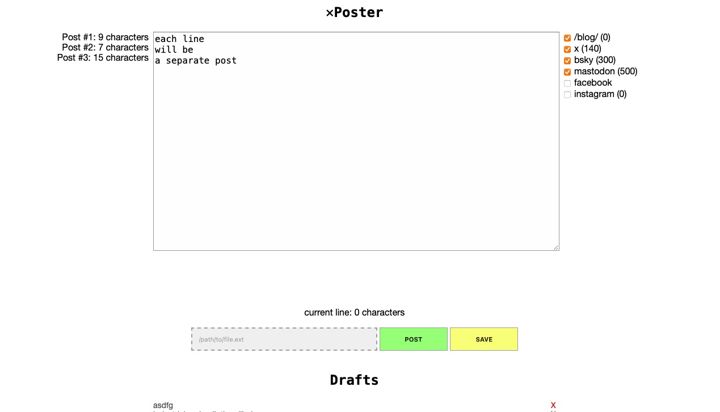

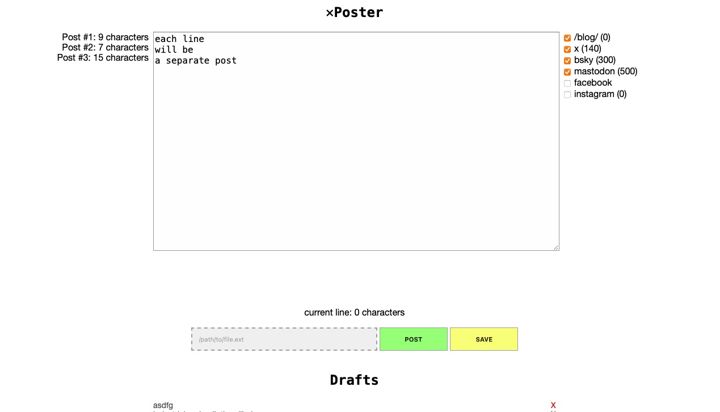

xPoster

Front-end for the do_social_media_post.py crossposter.

do_social_media_post

Social media crossposter.

    import shlex, sys
    from subprocess import run

    PYTHON = "/opt/homebrew/opt/python@3.9/libexec/bin/python3"
    BOTS_DIR = "/Users/admin/Documents/social_media_bots"

    caption = sys.argv[1]
    social_media = sys.argv[2].split(',')
    image_caption = sys.argv[3] if len(sys.argv) == 4 else ""

    # A first argument ending in an image extension is treated as an image path.
    is_image = caption.endswith(('jpg', 'png', 'gif', 'jpeg'))

    for site in social_media:
        if is_image:
            command = [PYTHON, BOTS_DIR + "/" + site + "/posters/" + site + "_image.py", caption, image_caption]
        elif site != "instagram":
            command = [PYTHON, BOTS_DIR + "/" + site + "/posters/" + site + "_text.py", caption]
        else:
            print("instagram does not support text posting")
            continue

        # Pass arguments as a list so captions need no shell quoting or escaping.
        print(shlex.join(command))
        run(command)

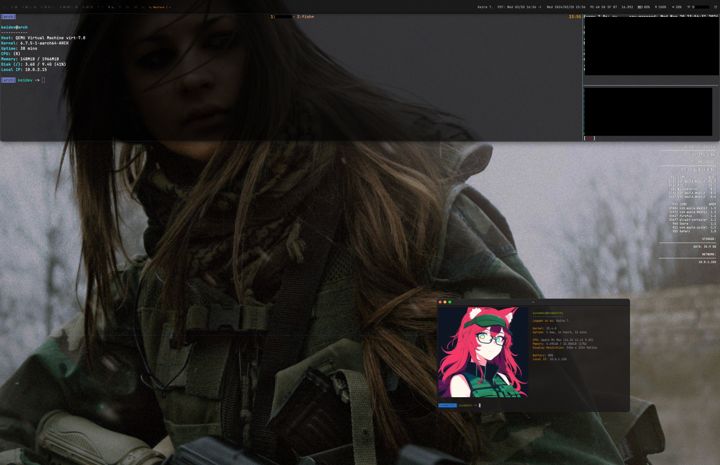

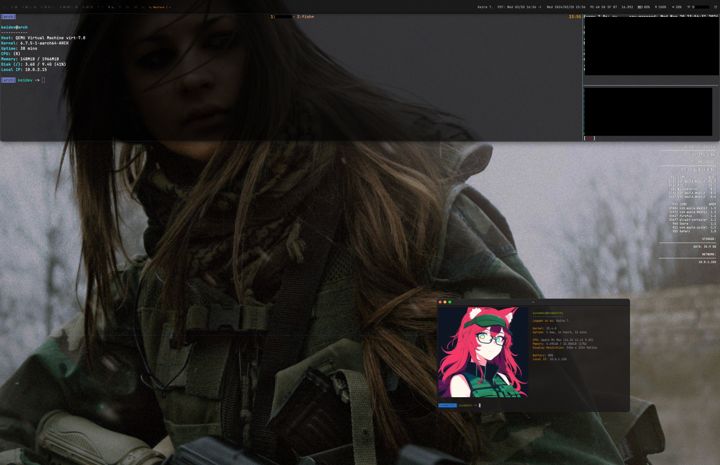

macOS 14.4 configuration

proxmox-backup

A script to back up Proxmox containers and host configs, with the optional ability to automatically store them in LUKS-encrypted images. Also available for LXD.

#!/bin/bash -l

hostname=$(hostname)

backups_folder="/Backups/Pi/$hostname"

path="/tmp/backup-$hostname/"

path_host=$path"host/"

path_containers="/var/lib/vz/dump"

mail=1

mailto="root"

make_encrypted=0

encryption_passphrase="passphrase"

path_crypt="luks/"

crypt_ext=".crypt"

days=7

run=$1

wait=120

timestamp=$(date +%Y%m%d_%H%M%S)

host_files=("/root/.bashrc" "/root/.ssh" "/root/.bash_profile" "/root/.bash_history" "/root/.tmux.conf" "/root/.local/share/fish" "/root/Scripts" "/etc/wireguard" "/etc/logrotate.d" "/etc/profile" "/etc/netdata" "/etc/fish" "/etc/fail2ban" "/etc/ssh" "/etc/sysctl.conf" "/etc/cron.d" "/etc/cron.daily" "/etc/cron.weekly" "/etc/cron.hourly" "/etc/cron.deny" "/etc/crontab" "/var/spool/cron" "/etc/sysconfig" "/etc/fstab" "/etc/crypttab" "/etc/postfix" "/etc/hosts" "/etc/resolv.conf" "/etc/aliases" "/etc/rsyslog.d" "/etc/ufw" "/etc/pam.d" "/etc/netplan" "/etc/wpa_supplicant" "/etc/network" "/etc/networks" "/etc/apt" "/etc/apticron" "/etc/yum.repos.d" "/etc/iptables.rules" "/etc/ip6tables.rules" "/etc/iptables" "/etc/modprobe.d" "/etc/pve" "/etc/udev" "/etc/modules-load.d" "/etc/systemd" "/etc/update-motd.d" "/etc/lightdm" "/etc/groups" "/etc/passwd" "/etc/nsswitch.conf" "/etc/netatalk" "/etc/samba" "/etc/avahi" "/etc/default" "/etc/nanorc" "/etc/X11" "/etc/netconfig")

core=("100" "101" "102" "103")

log_file=backup-$hostname-$timestamp.log

log=$path$log_file

rm -r $path

rm -r $path_containers

mkdir -p $path

mkdir -p $path_host

mkdir -p $path_containers

mkdir -p $backups_folder

if [[ "$make_encrypted" == 1 ]]; then

mkdir -p $path$path_crypt

fi

convertsecs() {

((h=${1}/3600))

((m=(${1}%3600)/60))

((s=${1}%60))

printf "%02d:%02d:%02d\n" $h $m $s

}

proxmox_backup() {

container=$1

vzdump $container

pct unlock $container

}

make_encrypted_container(){

name=$1

file=$2

mountpoint="$2/enc/"

size=$(du -s $file | awk '{print $1}')

if [ "$size" -lt "65536" ]; then

size=65536

else

size="$(($size + 65536))"

fi

crypt_filename=$hostname-$name-$timestamp$crypt_ext

crypt_mapper=$hostname-$name-$timestamp

crypt_devmapper="/dev/mapper/$crypt_mapper"

fallocate -l "$size"KiB $path$path_crypt$crypt_filename

printf '%s' "$encryption_passphrase" | cryptsetup luksFormat $path$path_crypt$crypt_filename -

printf '%s' "$encryption_passphrase" | cryptsetup luksOpen $path$path_crypt$crypt_filename $crypt_mapper

mkfs -t ext4 $crypt_devmapper

mkdir -p $mountpoint

mount $crypt_devmapper $mountpoint

}

unmount_encrypted_container(){

name=$1

mountpoint="$2/enc/"

crypt_mapper=$hostname-$name-$timestamp

crypt_devmapper="/dev/mapper/$crypt_mapper"

umount $mountpoint

cryptsetup luksClose $crypt_mapper

}

if [[ "$run" == 1 ]]; then

if [[ "$make_encrypted" == 1 ]]; then

find $backups_folder -maxdepth 1 -name "*$crypt_ext" -type f -mtime +$days -print -delete >> $log

fi

find $backups_folder -maxdepth 1 -name "*.log" -type f -mtime +$days -print -delete >> $log

find $backups_folder -maxdepth 1 -name "*.tar" -type f -mtime +$days -print -delete >> $log

find $backups_folder -maxdepth 1 -name "*.zip" -type f -mtime +$days -print -delete >> $log

fi

START_TIME=$(date +%s)

echo "Backup:: Script start -- $timestamp" >> $log

echo "Backup:: Host: $hostname -- Date: $timestamp" >> $log

echo "Paths:: Host: $path" >> $log

echo "Paths:: Containers: $path_containers" >> $log

echo "Paths:: Backups: $backups_folder" >> $log

echo "Backup:: Backing up the following host files to $path_host" >> $log

for host_file in ${host_files[@]}; do

echo "Backup:: Starting backup of $host_file to $path_host" >> $log

host_file_safe=$(echo $host_file | sed 's|/|-|g')

if [[ "$run" == 1 ]]; then

zip -r $path_host$host_file_safe-$timestamp.zip "$host_file" >> $log

fi

done

echo "Backup:: Host files successfully backed up" >> $log

if [[ "$run" == 1 ]]; then

if [[ "$make_encrypted" == 1 ]]; then

echo "Backup:: Making an encrypted container for host files" >> $log

make_encrypted_container "host" $path_host

echo "Backup:: Moving files to encrypted container" >> $log

mv $path_host/*.zip "$path_host/enc/"

echo "Backup:: Unmounting encrypted container" >> $log

unmount_encrypted_container "host" $path_host

rm -rf $path_host/*

echo "Backup:: Successfully encrypted host backup" >> $log

fi

fi

echo "Backup:: Backing up containers" >> $log

for container in ${core[@]}; do

echo "Backup:: Starting backup on $container to $path_containers" >> $log

if [[ "$run" == 1 ]]; then

proxmox_backup $container >> $log

sleep $wait

fi

done

if [[ "$run" == 1 ]]; then

if [[ "$make_encrypted" == 1 ]]; then

echo "Backup:: Making an encrypted container for containers" >> $log

make_encrypted_container "containers" $path_containers

echo "Backup:: Moving files to encrypted container" >> $log

mv $path_containers/*.tar.gz "$path_containers/enc/"

echo "Backup:: Unmounting encrypted container" >> $log

unmount_encrypted_container "containers" $path_containers

rm -rf $path_containers/*

echo "Backup:: Successfully encrypted core container backup" >> $log

fi

sleep $wait

fi

rsync -a --progress $log $backups_folder >> $log

if [[ "$make_encrypted" == 1 ]]; then

rsync -a --progress $path$path_crypt $backups_folder >> $log

else

rsync -a --progress $path_host $backups_folder >> $log

rsync -a --progress $path_containers/ $backups_folder >> $log

fi

END_TIME=$(date +%s)

elapsed_time=$(( $END_TIME - $START_TIME ))

echo "Backup :: Script End -- $(date +%Y%m%d_%H%M)" >> $log

echo "Elapsed Time :: $(convertsecs $elapsed_time) " >> $log

backup_size=`find $path -maxdepth 5 -type f -mmin -360 -exec du -ch {} + | grep total$ | awk '{print $1}'`

backup_stored=`find $path -maxdepth 5 -type f -exec du -ch {} + | grep total$ | awk '{print $1}'`

disk_remaining=`df -Ph $backups_folder | tail -1 | awk '{print $4}'`

echo -e "Subject: [$hostname] Backup Finished [$backup_size] [stored: $backup_stored | disk remaining: $disk_remaining] (took $(convertsecs $elapsed_time))\n\n$(cat $log)" > $log

if [[ "$mail" == 1 ]]; then

sendmail -v $mailto < $log

fi

sleep $wait

rm -r $path

rm -r $path_containers

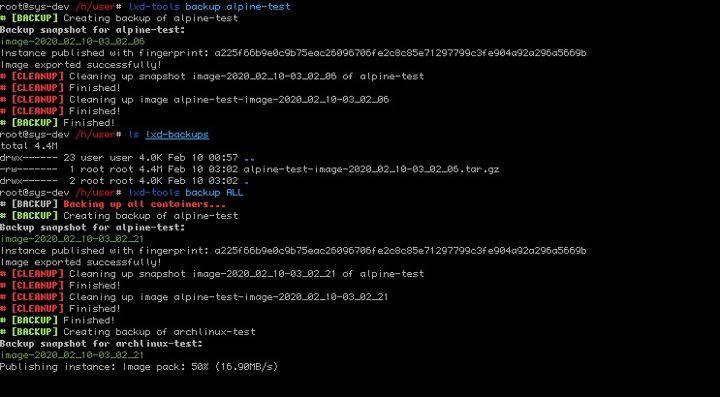

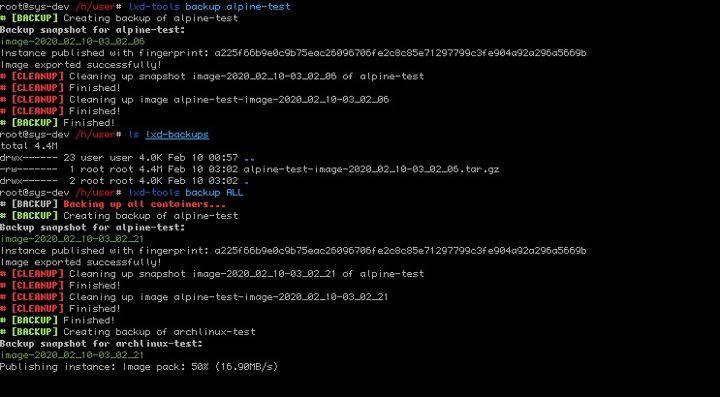

lxd-backup

#!/bin/bash

hostname=$(hostname)

lxc="/snap/bin/lxc"

path="/mount/backups/$hostname/"

path_host=$path"host/"

path_lxd=$path"lxd/"

path_lxd_core=$path"lxd/core/"

mail=1

mailto="root"

make_encrypted=1

encryption_passphrase="passphrase"

path_crypt="luks/"

crypt_ext=".encrypted"

days=7

run=1

wait=15

timestamp=$(date +%Y%m%d_%H%M%S)

/bin/mkdir -p $path

/bin/mkdir -p $path_host

/bin/mkdir -p $path_lxd

/bin/mkdir -p $path_lxd_core

if [[ "$make_encrypted" == 1 ]]; then

/bin/mkdir -p $path$path_crypt

fi

convertsecs() {

((h=${1}/3600))

((m=(${1}%3600)/60))

((s=${1}%60))

/usr/bin/printf "%02d:%02d:%02d\n" $h $m $s

}

lxdbackup() {

container=$1

folder=$2

snapshotname=$container-$timestamp.snapshot

backupname=lxd-image-$container-$timestamp

$lxc snapshot $container $snapshotname

$lxc publish --force $container/$snapshotname --alias $backupname

$lxc image export $backupname $folder$backupname

$lxc delete $container/$snapshotname

$lxc image delete $backupname

}

make_encrypted_container(){

name=$1

file=$2

mountpoint="$2/enc/"

size=$(du -s $file | awk '{print $1}')

if [ "$size" -lt "65536" ]; then

size=65536

else

size="$(($size + 65536))"

fi

crypt_filename=$hostname-$name-$timestamp$crypt_ext

crypt_mapper=$hostname-$name-$timestamp

crypt_devmapper="/dev/mapper/$crypt_mapper"

/usr/bin/fallocate -l "$size"KiB $path$path_crypt$crypt_filename

/usr/bin/printf '%s' "$encryption_passphrase" | /sbin/cryptsetup luksFormat $path$path_crypt$crypt_filename -

/usr/bin/printf '%s' "$encryption_passphrase" | /sbin/cryptsetup luksOpen $path$path_crypt$crypt_filename $crypt_mapper

/sbin/mkfs -t ext4 $crypt_devmapper

/bin/mkdir -p $mountpoint

/bin/mount $crypt_devmapper $mountpoint

}

unmount_encrypted_container(){

name=$1

mountpoint="$2/enc/"

crypt_mapper=$hostname-$name-$timestamp

crypt_devmapper="/dev/mapper/$crypt_mapper"

/bin/umount $mountpoint

/sbin/cryptsetup luksClose $crypt_mapper

}

host_files=("/root/.bashrc" "/root/.bash_profile" "/root/.bash_history" "/root/.tmux.conf" "/root/.local/share/fish" "/root/scripts" "/etc/wireguard" "/etc/logrotate.d" "/etc/profile" "/etc/netdata" "/etc/fish" "/etc/fail2ban" "/etc/ssh" "/etc/sysctl.conf" "/etc/cron.d" "/etc/cron.daily" "/etc/cron.weekly" "/etc/cron.hourly" "/etc/cron.deny" "/etc/crontab" "/var/spool/cron" "/etc/sysconfig" "/etc/fstab" "/etc/crypttab" "/etc/postfix" "/etc/hosts" "/etc/resolv.conf" "/etc/aliases" "/etc/rsyslog.d" "/etc/ufw" "/etc/pam.d" "/etc/netplan" "/etc/wpa_supplicant" "/etc/network" "/etc/networks" "/etc/apt" "/etc/apticron" "/etc/yum.repos.d")

core=("nginx" "mariadb" "mail")

logname=backup-$hostname-$timestamp.log

log=$path$logname

if [[ "$make_encrypted" == 1 ]]; then

find $path$path_crypt -maxdepth 1 -name "*$crypt_ext" -type f -mtime +$days -print -delete >> $log

fi

find $path_host -maxdepth 1 -name "*.zip" -type f -mtime +$days -print -delete >> $log

find $path_host -maxdepth 1 -name "*.log" -type f -mtime +$days -print -delete >> $log

find $path_lxd_core -maxdepth 1 -name "*.tar.gz" -type f -mtime +$days -print -delete >> $log

START_TIME=$(date +%s)

echo "Backup:: Script start -- $timestamp" >> $log

echo "Backup:: Host: $hostname -- Date: $timestamp" >> $log

echo "Backup:: Backing up the following host files to $path_host" >> $log

echo "${host_files[@]}" >> $log

for host_file in ${host_files[@]}; do

echo "Backup:: Starting backup of $host_file to $path_host" >> $log

host_file_safe=$(echo $host_file | sed 's|/|-|g')

if [[ "$run" == 1 ]]; then

zip -r $path_host$host_file_safe-$timestamp.zip "$host_file" >> $log

fi

done

echo "Backup:: Host files successfully backed up" >> $log

if [[ "$run" == 1 ]]; then

if [[ "$make_encrypted" == 1 ]]; then

echo "Backup:: Making an encrypted container for host files" >> $log

make_encrypted_container "host" $path_host

echo "Backup:: Moving files to encrypted container" >> $log

/bin/mv $path_host/*.zip "$path_host/enc/"

echo "Backup:: Unmounting encrypted container" >> $log

unmount_encrypted_container "host" $path_host

/bin/rm -rf $path_host

echo "Backup:: Successfully encrypted host backup" >> $log

fi

fi

echo "Backup:: Backing up containers" >> $log

for container in ${core[@]}; do

echo "Backup:: Starting backup on $container to $path_lxd_core" >> $log

if [[ "$run" == 1 ]]; then

lxdbackup $container $path_lxd_core >> $log

/bin/sleep $wait

fi

done

if [[ "$run" == 1 ]]; then

if [[ "$make_encrypted" == 1 ]]; then

echo "Backup:: Making an encrypted container for core containers" >> $log

make_encrypted_container "core" $path_lxd_core

echo "Backup:: Moving files to encrypted container" >> $log

/bin/mv $path_lxd_core/*.tar.gz "$path_lxd_core/enc/"

echo "Backup:: Unmounting encrypted container" >> $log

unmount_encrypted_container "core" $path_lxd_core

/bin/rm -rf $path_lxd_core

echo "Backup:: Successfully encrypted core container backup" >> $log

fi

/bin/sleep $wait

fi

END_TIME=$(date +%s)

elapsed_time=$(( $END_TIME - $START_TIME ))

echo "Backup :: Script End -- $(date +%Y%m%d_%H%M)" >> $log

echo "Elapsed Time :: $(convertsecs $elapsed_time) " >> $log

backup_size=`find $path -maxdepth 5 -type f -mmin -360 -exec du -ch {} + | grep total$ | awk '{print $1}'`

backup_stored=`find $path -maxdepth 5 -type f -exec du -ch {} + | grep total$ | awk '{print $1}'`

disk_remaining=`df -Ph $path | tail -1 | awk '{print $4}'`

echo -e "Subject: [$hostname] Backup Finished [$backup_size] [stored: $backup_stored | disk remaining: $disk_remaining] (took $(convertsecs $elapsed_time))\n\n$(cat $log)" > $log

if [[ "$mail" == 1 ]]; then

/usr/sbin/sendmail -v $mailto < $log

fi

githubrepos

Periodically generates an HTML page listing your GitHub repos (requires the GitHub CLI, i.e. the gh command).

    import html, json, os
    from datetime import datetime, timedelta

    import humanize

    file = '/home/admin/Repositories/Public/github.html'
    github_json_file = '/home/admin/Resources/github.json'

    def human_size(filesize):
        return humanize.naturalsize(filesize)

    def recent_date(unixtime):
        dt = datetime.fromtimestamp(unixtime)
        today = datetime.now()
        # Compare against midnight so "Today"/"Yesterday" follow calendar days.
        today_start = datetime(today.year, today.month, today.day)
        yesterday_start = today_start - timedelta(days=1)
        week_start = today_start - timedelta(days=today.weekday())

        timeformat = '%b %d, %Y'
        if dt >= week_start:
            timeformat = '%A at %H:%M'
        if dt >= yesterday_start:
            timeformat = 'Yesterday at %H:%M'
        if dt >= today_start:
            timeformat = 'Today at %H:%M'
        return dt.strftime(timeformat)

    def shorten_text(s, n):
        # Keep the start and end of the string, joined by "...".
        if len(s) <= n:
            return s
        n_2 = n // 2 - 3
        n_1 = n - n_2 - 3
        return '{0}...{1}'.format(s[:n_1], s[-n_2:])

    # The JSON file is refreshed separately with this command; the script never runs it.
    cmd = 'gh repo list researcx -L 400 --visibility public --json name,owner,pushedAt,isFork,diskUsage,description > github.json'

    repo_list = ""
    last_updated = ""
    i = 0

    with open(github_json_file, 'r') as gh_repos:
        repos = json.load(gh_repos)

    for repo in repos:
        i = 1 if i == 0 else 0  # alternate row styles (.v0 / .v1)
        iso_date = datetime.strptime(repo['pushedAt'], '%Y-%m-%dT%H:%M:%SZ')
        timestamp = int((iso_date - datetime(1970, 1, 1)).total_seconds())
        date_time = recent_date(timestamp)
        owner = html.escape(str(repo['owner']['login']))
        str_repo = html.escape(str(repo['name']))
        description = str(repo['description'] or "")
        path_repo = owner + "/" + str_repo
        # Shorten before escaping so an HTML entity is never cut in half.
        str_desc = "<br/><span class='description' title='" + html.escape(description) + "'>" + html.escape(shorten_text(description, 150)) + "</span>"
        link_repo = '<a href="https://github.com/' + path_repo + '" class="user" target="_blank">' + owner + '/</a><a href="https://github.com/' + path_repo + '" target="_blank">' + str_repo + '</a>'
        disk_usage = " " + human_size(repo['diskUsage'] * 1024) + " "
        is_fork = "<span title='Fork'>[F]</span> " if repo['isFork'] else ""
        repo_list += '\n<div class="list v' + str(i) + '"><span style="float:right;">' + is_fork + disk_usage + str(date_time) + '</span>' + link_repo + str_desc + '</div>'
        if last_updated == "":
            last_updated = timestamp

    # Named "page" so the html module imported above is not shadowed.
    page = """<!DOCTYPE html>
    <html lang="en">
    <head>
    <meta charset="UTF-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <meta http-equiv="X-UA-Compatible" content="ie=edge">
    <title>repositories</title>
    <style>
    body, html {
        padding: 0px;
        margin: 0px;
        color: #dc7224;
        font-family: JetBrainsMono, Consolas, "Helvetica Neue", Helvetica, Arial, sans-serif
    }
    a {
        color: #dc7224 !important;
        text-decoration: none;
    }
    a:hover {
        color: #efefef !important;
        text-decoration: underline;
    }
    a.user {
        color: #aa7735 !important;
        text-decoration: none;
    }
    span {
        font-size: 14pt;
        margin-top: 2px;
        color: #555;
    }
    .list {
        font-size: 16pt;
        padding: 4px 8px;
        border-bottom: 1px dashed #101010;
        background: #000;
    }
    .list.v0 {
        background: #0f0f0f;
    }
    .list.v1 {
        background: #121212;
    }
    .description {
        font-size: 10pt;
        color: #555;
    }
    </style>
    </head>
    <body>""" + repo_list + """
    <div class="list" style="text-align: right;">
    <span style="font-style: italic; font-size: 9pt;">generated by <a href="https://researcx.gitlab.io/#d97c874b">githubrepos.py</a></span>
    </div>
    </body>
    </html>
    """

    with open(file, "w") as f:
        f.write(page)

    # Set the page's modification time to the most recent push, if there is one.
    if last_updated != "":
        os.utime(file, (last_updated, last_updated))

researcx/ss13-lawgen [Content-Warning]

Space Station 13 AI Law Generator (Browser Version).

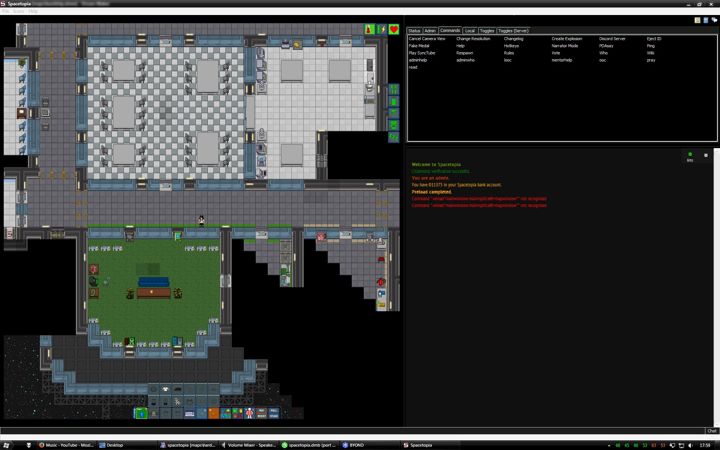

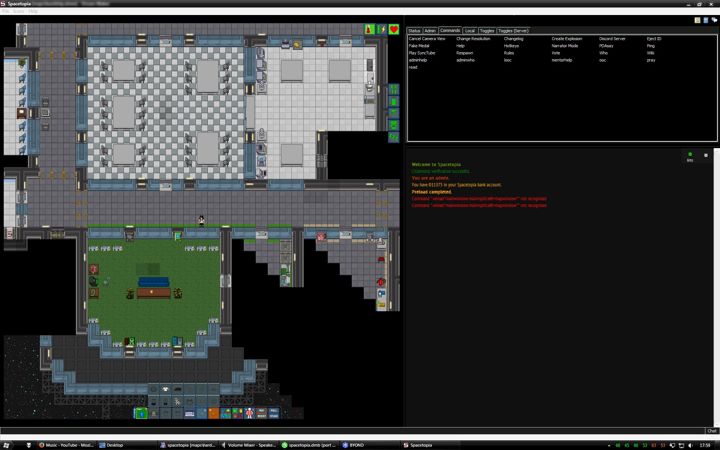

researcx/spacetopia (Spacetopia)

A role-play oriented Space Station 13 mod which adds certain Second-Life features and character customisation amongst other changes that are highly inspired by EVE Online and Furcadia.

Features:

- A marketplace where users can submit various types of clothing, character details and miscellaneous other vanity items.

- Users can buy things from the marketplace to apply to their character; items can also be gifted to other users.

- Extensive character customization with the ability to change your skin tone and color, the detail and color of body parts and the ability to change the style of your body.

- All outfits/uniforms are separated into tops, bottoms, underwear and socks (with inventory slots for each) for better customization.

- Clothing selection using clothes from the marketplace is available on the character preference screen.

- Customizable personal housing with item/storage persistency and the ability to change your furniture/decor.

- The station doesn't require large amounts of maintenance, making it well suited to semi-serious and serious roleplay.

- In-game integration with on-site Spacetopia currency.

- New game and web UI.

- Ability to set up extra information/a character sheet.

- Ability to change resolution/view distance

- Character preview can be rotated by clicking on it.

- Players can decide whether to spawn with a satchel or a backpack.

- Improved sprites for floors, air alarms, APCs and ATMs.

- More nature sprites.

- Gender is now a text field.

- Closets spawn with parts of clothing rather than uniforms.

- PvP toggle and PvP-only areas (such as exploratory).

- No permadeath.

- Players spawn as a civilian by default and can choose an occupation later in-game using a computer.

- Many sprites, optimizations, fixes and features ported from D2Station.

- Major improvements upon the API system written for D2Station V4.

More images...

weatherscript

Adjusts CPU frequency based on time of day and weather. (For solar panels.)

    LAT=53.3963308
    LON=-1.5155923

    # Fetch the current conditions from Open-Meteo.
    JSON=$(curl -s "https://api.open-meteo.com/v1/forecast?latitude=$LAT&longitude=$LON&current_weather=true")
    WEATHERCODE=$(echo "$JSON" | jq -r '.current_weather.weathercode')
    IS_DAY=$(echo "$JSON" | jq -r '.current_weather.is_day')

    if [ "$IS_DAY" -eq 1 ]; then
        echo -e "\033[1;32mSUCC: It is daytime!\033[0m"
        # Weather codes above 2 (overcast or worse) are treated as too dark.
        if [ "$WEATHERCODE" -gt 2 ]; then
            echo -e "\033[1;31mERR: Unsuitable weather conditions (dark)\033[0m"
            x86_energy_perf_policy --turbo-enable 0; cpupower frequency-set -u 1.5GHz > /dev/null
        else
            echo -e "\033[1;32mSUCC: Suitable weather conditions (bright)\033[0m"
            x86_energy_perf_policy --turbo-enable 1; cpupower frequency-set -u 5GHz > /dev/null
        fi
    else
        echo -e "\033[1;31mERR: It is not daytime!\033[0m"
        x86_energy_perf_policy --turbo-enable 0; cpupower frequency-set -u 1GHz > /dev/null
    fi
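The decision logic of the script above can also be written as a small pure function, which makes the three cases easy to check without touching the CPU. This is only a sketch: the name `choose_cpu_policy` and the tuple return value are assumptions for illustration, while the thresholds and frequencies are the ones used in the script.

```python
def choose_cpu_policy(is_day, weathercode):
    """Return (turbo_enabled, max_frequency) for the given conditions.

    Hypothetical helper mirroring the shell script's branches:
    night -> 1GHz, dark daytime weather -> 1.5GHz, bright daytime -> 5GHz.
    """
    if not is_day:
        return (False, "1GHz")
    if weathercode > 2:  # same threshold as the script: overcast or worse
        return (False, "1.5GHz")
    return (True, "5GHz")
```

The returned values map directly onto the `--turbo-enable` flag and the `frequency-set -u` limit used by the script.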

discord_export_friends

Python script to back up Discord tags with user IDs. (For friend lists.)

    import discord

    token = ""

    class ExportFriends(discord.Client):
        async def on_connect(self):
            # Relies on user-account support (bot=False), which newer discord.py releases removed.
            for user in self.user.friends:
                print(user.name + "#" + user.discriminator + " (" + str(user.id) + ")")

    client = ExportFriends()
    client.run(token, bot=False)

Square avatar generator for Twitter.

From noiob/noiob.github.io/main/hexagon.html

researcx/timg

Self-destructing image upload server in Python+Flask. Image is loaded in base64 in the browser and destroyed as soon as it is viewed (experimental).
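The view-once idea can be sketched without Flask as a single function that encodes the image for the browser and deletes it in the same step. This is an assumption-laden illustration, not timg's code: the function name `consume_image` and the use of a `data:` URI are hypothetical.

```python
import base64
import mimetypes
import os


def consume_image(path):
    """Return the image as a base64 data URI and delete it from disk.

    Hypothetical helper: after this call the file no longer exists,
    so the image can only ever be served once.
    """
    mime = mimetypes.guess_type(path)[0] or "application/octet-stream"
    with open(path, "rb") as f:
        encoded = base64.b64encode(f.read()).decode("ascii")
    os.remove(path)  # destroy the image as soon as it has been read
    return f"data:{mime};base64,{encoded}"
```

In a web handler, the returned string could be placed directly in an `<img src="...">` attribute, so no second request for the image file is ever needed.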

DonateBot

Game-server style "donate to us" announcer bot for IRC channels.

    from twisted.words.protocols import irc
    from twisted.internet import reactor, protocol

    serv_ip = "10.3.0.50"
    serv_port = 6667

    with open('/root/DonateBot/donate.txt') as f:
        message = f.read()

    class DonateBot(irc.IRCClient):
        nickname = "DonateBot"
        chatroom = "#xch"

        def signedOn(self):
            self.join(self.chatroom)
            # Schedule the next step on the reactor; time.sleep() would block it.
            reactor.callLater(2, self.announce)

        def announce(self):
            self.msg(self.chatroom, message)
            self.part(self.chatroom)
            reactor.callLater(2, self.quit)

        def quit(self, message=""):
            self.sendLine("QUIT :%s" % message)

    def main():
        f = protocol.ReconnectingClientFactory()
        f.protocol = DonateBot
        reactor.connectTCP(serv_ip, serv_port, f)
        reactor.run()

    if __name__ == "__main__":
        main()

Adds server password and channel-specific trigger word support to the IRC module.

From jrabbit/pyborg-1up

A multi-network multi-channel IRC relay. Acts as a soft-link between IRC servers.

- Allows you to configure more than two networks with more than two channels for messages to be relayed between.

- Records users on every configured channel on every configured network.

- Remembers all invited users with a rank which the bots will automatically attempt to promote them to.

- Provides useful commands for administrators to manage their conduit-linked servers with.

- When used with the Matrix IRC AppService, filters Matrix and Discord nicks and messages for clarity.

A WeeChat config exporter which saves only non-default variables. Outputs /set commands, myweechat.md-style Markdown or raw config.

    try:
        import weechat as w
    except Exception:
        print("This script must be run under WeeChat.")
        print("Get WeeChat now at: https://weechat.org")
        quit()

    from os.path import exists

    SCRIPT_NAME = "confsave"
    SCRIPT_AUTHOR = "researcx <https://linktr.ee/researcx>"
    SCRIPT_LINK = "https://github.com/researcx/weechat-confsave"
    SCRIPT_VERSION = "0.1"
    SCRIPT_LICENSE = "WTFPL"
    SCRIPT_DESC = "Save non-default config variables to a file in various formats."
    SCRIPT_COMMAND = SCRIPT_NAME

    if w.register(SCRIPT_NAME, SCRIPT_AUTHOR, SCRIPT_VERSION, SCRIPT_LICENSE, SCRIPT_DESC, "", ""):
        w.hook_command(SCRIPT_COMMAND,
                       SCRIPT_DESC + "\nnote: will attempt to exclude plaintext passwords.",
                       "[filename] [format]",
                       " filename: target file (must not exist)\n format: raw, markdown or commands\n",
                       "%f",
                       "confsave_cmd",
                       '')

    def confsave_cmd(data, buffer, args):
        args = args.split(" ")
        filename_raw = args[0]
        # The format argument may be missing; an empty value is rejected below.
        output_format = args[1] if len(args) > 1 else ""
        acceptable_formats = ["raw", "markdown", "commands"]
        output = ""
        lastheader = ""

        if not filename_raw:
            w.prnt('', 'Error: filename not specified!')
            w.command('', '/help %s' % SCRIPT_COMMAND)
            return w.WEECHAT_RC_OK

        if output_format not in acceptable_formats:
            w.prnt('', 'Error: format incorrect or not specified!')
            w.command('', '/help %s' % SCRIPT_COMMAND)
            return w.WEECHAT_RC_OK

        filename = w.string_eval_path_home(filename_raw, {}, {}, {})

        # Collect every option whose value differs from its default.
        infolist = w.infolist_get("option", "", "")
        variable_dict = {}
        if infolist:
            while w.infolist_next(infolist):
                infolist_name = w.infolist_string(infolist, "full_name")
                infolist_default = w.infolist_string(infolist, "default_value")
                infolist_value = w.infolist_string(infolist, "value")
                infolist_type = w.infolist_string(infolist, "type")
                if infolist_value != infolist_default:
                    variable_dict[infolist_name] = {
                        'main': infolist_name.split(".")[0],
                        'name': infolist_name,
                        'value': infolist_value,
                        'type': infolist_type,
                    }
            w.infolist_free(infolist)

        if output_format == "markdown":
            output += "## weechat configuration"
            output += "\n*automatically generated using [" + SCRIPT_NAME + ".py](" + SCRIPT_LINK + ")*"

        for config in variable_dict.values():
            # Skip passwords and values that reference secured data.
            if "password" in config['name'] or "sec.data" in config['value']:
                continue

            # In markdown output, start a new section for each config file.
            if output_format == "markdown" and config['main'] != lastheader:
                output += "\n### " + config['main']
                lastheader = config['main']

            write_name = config['name']
            if config['type'] == "string":
                write_value = "\"" + config['value'] + "\""
            else:
                write_value = config['value']

            if output_format == "markdown":
                output += "\n\t/set " + write_name + " " + write_value
            if output_format == "raw":
                output += "\n" + write_name + " = " + write_value
            if output_format == "commands":
                output += "\n/set " + write_name + " " + write_value

        output += "\n"

        if exists(filename):
            w.prnt('', 'Error: target file already exists!')
            return w.WEECHAT_RC_OK

        try:
            with open(filename, 'w') as fp:
                fp.write(output)
        except OSError:
            w.prnt('', 'Error writing to target file!')
            return w.WEECHAT_RC_OK

        w.prnt("", "\nSuccessfully outputted to " + filename + " as " + output_format + "!")
        return w.WEECHAT_RC_OK

GitLab • GitHub

LXD powertool for container mass-management, migration and automation.

Fetch a random fursona from thisfursonadoesnotexist.com

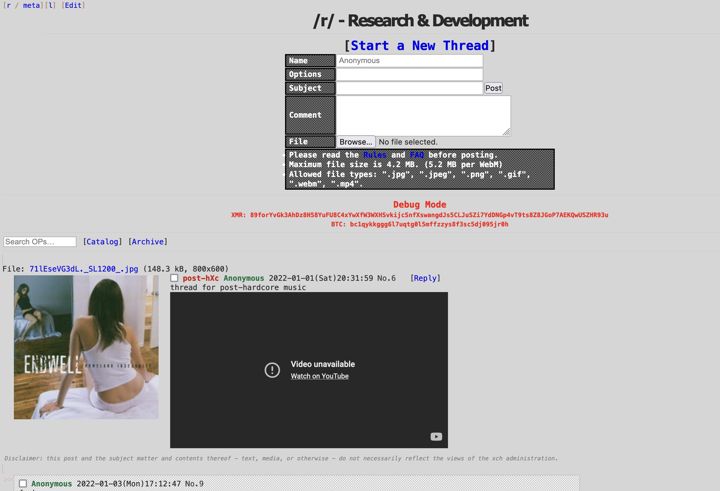

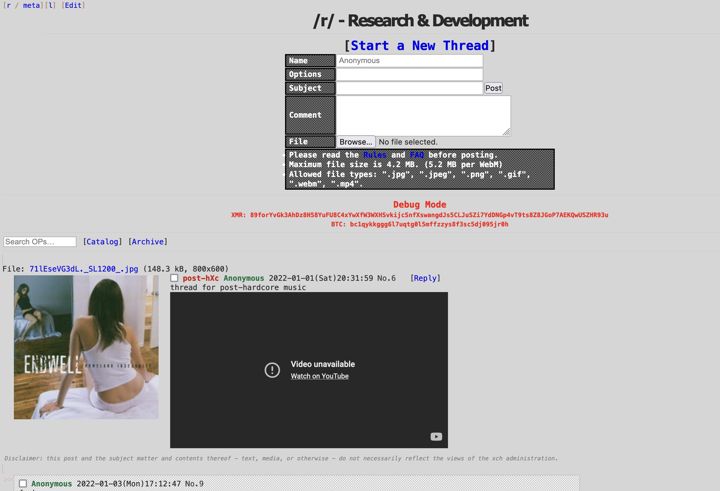

researcx/xch (incomplete)

Imageboard software.

Feature List

"infinity" imageboard script fork with fixes, instructions for modern system installation, basic recent threads functionality and some added missing files.

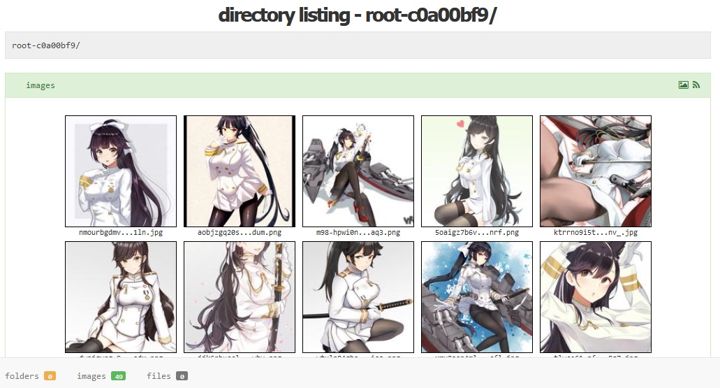

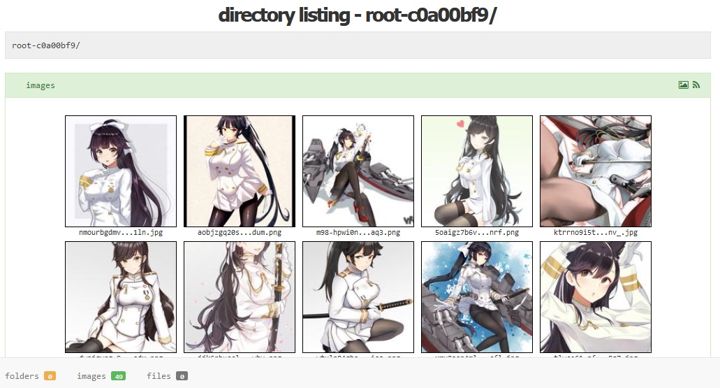

Directory listing script based on SPKZ's + Garry's Directory Listing.

Features:

- Lists all folders as-is without the need for a database.

- Automatic thumbnail generation for images and videos (150x150, 720x, 1600x)

- Embedded images, animation (.gif) and videos

- Toggle for image grid and gallery view

- RSS feeds for entire site and individual folders

- 18+ notices for folders marked as NSFW

- Ability to add hidden folders
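The thumbnail sizes listed above can be illustrated with a little arithmetic. The sketch below is not taken from the script: it assumes that "150x150" means a centred square crop and that "720x" and "1600x" mean a fixed width with the height scaled to keep the aspect ratio. Both function names are hypothetical.

```python
def scale_to_width(width, height, target_width):
    """Size of an image scaled to target_width, keeping its aspect ratio."""
    return target_width, max(1, round(height * target_width / width))


def center_square_box(width, height):
    """(left, top, right, bottom) of the largest centred square in the image."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return left, top, left + side, top + side
```

For a 1920x1080 source, `scale_to_width(1920, 1080, 720)` gives `(720, 405)`, and `center_square_box(1920, 1080)` gives `(420, 0, 1500, 1080)`, the region that would then be resized to 150x150.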

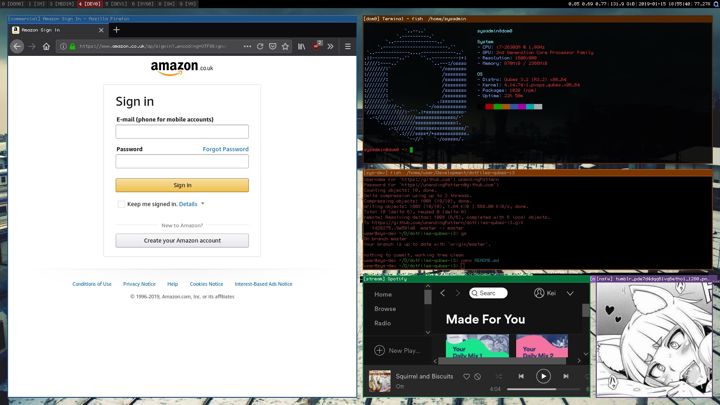

i3-gaps patch for qubes 3.2.

researchdepartment/dotfiles-qubes3.2-i3 (get the full dotfiles for the above setup here)

From SietsevanderMolen/i3-qubes

Fork of the goscraper webpage-scraper which adds timeout, proxy and user-agent support.

From badoux/goscraper.

void-linux-install

Minimal instructions for installing Void Linux on MBR + Legacy BIOS.

bash

loadkeys uk

wpa_passphrase <MYSSID> <key> >> /etc/wpa_supplicant/wpa_supplicant.conf

wpa_supplicant -i <device> -c /etc/wpa_supplicant/wpa_supplicant.conf -B

parted /dev/sdX mklabel msdos

cfdisk /dev/sdX

mkfs.ext2 /dev/sdX1

cryptsetup luksFormat --type luks2 --cipher aes-xts-plain64 --key-size 512 --hash sha256 /dev/sdX2

cryptsetup luksOpen /dev/sdX2 sysroot

pvcreate /dev/mapper/sysroot

vgcreate void /dev/mapper/sysroot

lvcreate --size 2G void --name swap

lvcreate -l +100%FREE void --name root

mkfs.xfs -i sparse=0 /dev/mapper/void-root

mkswap /dev/mapper/void-swap

mount /dev/mapper/void-root /mnt

swapon /dev/mapper/void-swap

mkdir /mnt/boot

mount /dev/sdX1 /mnt/boot

for i in /dev /dev/pts /proc /sys /run; do sudo mount -B $i /mnt$i; done

xbps-install -Sy -R https://mirrors.dotsrc.org/voidlinux/current -r /mnt base-system lvm2 cryptsetup grub nano htop tmux

chroot /mnt

bash

chown root:root /

chmod 755 /

passwd root

useradd -m -s /bin/bash -U -G wheel,users,audio,video,cdrom,input MYUSERNAME

passwd MYUSERNAME

nano /etc/sudoers

nano /etc/rc.conf

echo somehostname > /etc/hostname

echo "LANG=en_US.UTF-8" > /etc/locale.conf

echo "en_US.UTF-8 UTF-8" >> /etc/default/libc-locales

xbps-reconfigure -f glibc-locales

BOOT_UUID=$(blkid -o value -s UUID /dev/sdX1)

CRYPTD_UUID=$(blkid -o value -s UUID /dev/sdX2)

nano /etc/fstab

echo "UUID=${BOOT_UUID} /boot ext2 defaults 0 2" >> /etc/fstab

echo "GRUB_CMDLINE_LINUX_DEFAULT=\"loglevel=4 slub_debug=P page_poison=1 acpi.ec_no_wakeup=1 rd.auto=1 cryptdevice=UUID=${CRYPTD_UUID}:sysroot root=/dev/mapper/void-root resume=/dev/mapper/void-swap\"" >> /etc/default/grub

echo "GRUB_ENABLE_CRYPTODISK=y" >> /etc/default/grub

grub-install /dev/sdX

xbps-reconfigure -f linux4.19

exit

cp /etc/wpa_supplicant/wpa_supplicant.conf /mnt/etc/wpa_supplicant/wpa_supplicant.conf

umount -R /mnt

reboot

cryptsetup luksOpen /dev/sdX2 sysroot

vgchange -a y void

mount /dev/mapper/void-root /mnt

mount /dev/sdX1 /mnt/boot

for i in /dev /dev/pts /proc /sys /run; do sudo mount -B $i /mnt$i; done

chroot /mnt

bash

arch-linux-install

Minimal instructions for installing Arch Linux on GPT or MBR, with UEFI or Legacy BIOS.

sudo dd bs=4M if=archlinux-2019.01.01-x86_64.iso of=/dev/sdb status=progress oflag=sync

loadkeys uk

parted /dev/sdX mklabel msdos  # MBR only

cfdisk /dev/sdX  # MBR only

cgdisk /dev/sdX  # GPT only

mkfs.ext2 /dev/sdX1  # Legacy BIOS: /boot

mkfs.vfat -F32 /dev/sdX1  # UEFI: EFI system partition

mkfs.ext2 /dev/sdX2  # UEFI: /boot

cryptsetup luksFormat --type luks2 --cipher aes-xts-plain64 --key-size 512 --hash sha256 /dev/sdX3

cryptsetup luksOpen /dev/sdX3 sysroot

pvcreate /dev/mapper/sysroot

vgcreate arch /dev/mapper/sysroot

lvcreate --size 2G arch --name swap

lvcreate -l +100%FREE arch --name root

mkfs.xfs /dev/mapper/arch-root

mkswap /dev/mapper/arch-swap

mount /dev/mapper/arch-root /mnt

swapon /dev/mapper/arch-swap

mkdir /mnt/boot

mount /dev/sdX1 /mnt/boot  # Legacy BIOS

mount /dev/sdX2 /mnt/boot  # UEFI

mkdir /mnt/boot/efi  # UEFI

mount /dev/sdX1 /mnt/boot/efi  # UEFI

wifi-menu

pacstrap /mnt base base-devel fish nano vim git efibootmgr grub grub-efi-x86_64 dialog wpa_supplicant lsb-release

genfstab -pU /mnt >> /mnt/etc/fstab

nano /mnt/etc/fstab

arch-chroot /mnt /bin/fish

ln -sf /usr/share/zoneinfo/Europe/Jersey /etc/localtime

hwclock --systohc --utc

echo somehostname > /etc/hostname

nano /etc/locale.gen

echo LANG=en_US.UTF-8 >> /etc/locale.conf

locale-gen

passwd

useradd -m -g users -G wheel -s /bin/fish MYUSERNAME

passwd MYUSERNAME

nano /etc/sudoers

nano /etc/mkinitcpio.conf

grub-install --target=i386-pc /dev/sdX

exit

mkdir /mnt/hostlvm

mount --bind /run/lvm /mnt/hostlvm

arch-chroot /mnt /bin/fish

ln -s /hostlvm /run/lvm

nano /etc/default/grub

grub-mkconfig -o /boot/grub/grub.cfg

grub-install --target=x86_64-efi --efi-directory=/boot/efi --bootloader-id=ArchLinux

nano /etc/default/grub

bootctl --path=/boot/efi install

nano /boot/efi/loader/entries/arch.conf

mkinitcpio -p linux

exit

umount -R /mnt

swapoff -a

reboot

cryptsetup luksOpen /dev/sdX3 sysroot

mount /dev/mapper/arch-root /mnt

mount /dev/sdX1 /mnt/boot  # Legacy BIOS

mount /dev/sdX2 /mnt/boot  # UEFI

mount /dev/sdX1 /mnt/boot/efi  # UEFI

arch-chroot /mnt /bin/fish

Oragono IRCd mod providing imageboard features and anonymity to IRC.

Warning: Highly experimental!

Features and ideas of this fork:

- Tripcode and secure tripcode system (set password (/pass) to #tripcode, #tripcode#securetripcode or ##securetripcode to use) (90%)

- Auditorium mode (+u) (inspircd style) (90%)

- Greentext and basic ~markdown-to-irc~ formatting support (20%)

- Channel mode for displaying link titles and description (+U) (99%)

- Channel mode for per-word group highlights (+H <string>) (e.g. /mode #channel +H everyone; /msg hey @everyone) (90%) (Add cooldown system (0%))

- Private queries/whitelist mode (+P) which requires both users to be mutual contacts to use private messaging. (10%)

- Automatically generated and randomized join/quit (quake kill/death style?) messages (0%)

- Server statistics (join/quit/kick/ban counter, lines/words spoken) (0%)

- Simple federation (the IRC network would run over DHT or a similar system so that it is available worldwide; anyone should be able to host a server that connects to it with little to no knowledge required) (0%)

- Build in a WebRTC voice/video chat server and make (or modify an open source) web client to support voice and video chatting (0%)

- Web front-end for chat with trip authentication, Discord-style themes and avatar support, automatically hosted for every server/client (0%)

- Anonymity changes (reduced whois info, removed whowas info, completely hide or obfuscate IPs/hostmasks, make users without a tripcode completely anonymous and unidentifiable) (60%)

From ergochat/ergo
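The `/pass` syntax for tripcodes (`#tripcode`, `#tripcode#securetripcode` or `##securetripcode`) can be parsed roughly like this. It is a Python sketch rather than the fork's Go code: the SHA-256 hash, the 10-character truncation, the `!`/`!!` prefixes and `SERVER_SALT` are all illustrative assumptions.

```python
import hashlib

SERVER_SALT = "change-me"  # hypothetical server-side secret for secure tripcodes


def parse_trip_password(password):
    """Split '#trip', '#trip#secure' or '##secure' into (trip, secure) strings."""
    if not password.startswith("#"):
        return None  # ordinary password, no tripcode requested
    trip, _, secure = password[1:].partition("#")
    return trip, secure


def tripcode(secret, salt=""):
    """Derive a short public identifier from a secret (illustrative hash choice)."""
    digest = hashlib.sha256((salt + secret).encode("utf-8")).hexdigest()
    return digest[:10]


def display_codes(password):
    """Return (tripcode, secure tripcode); either part is None when not supplied."""
    parsed = parse_trip_password(password)
    if parsed is None:
        return None
    trip, secure = parsed
    return (
        "!" + tripcode(trip) if trip else None,
        "!!" + tripcode(secure, SERVER_SALT) if secure else None,
    )
```

Only the secure variant mixes in the server-side salt, so a plain tripcode would be reproducible on any server while a secure one would be tied to this one.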

My personal weechat configuration.

Added user count and user list API call to znc-httpadmin.

From prawnsalad/znc-httpadmin

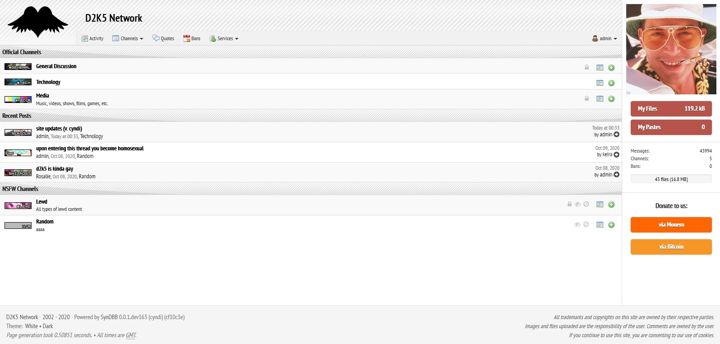

researcx/SynDBB (Cyndi)

Forum software inspired by IRC, imageboards, Facepunch and SomethingAwful.

Hybridization of different aspects of classic internet forums, imageboards, and IRC.

Features:

- File uploader with external upload support.

- Anonymous file uploader.

- Automatic Exif data removal on uploaded images.

- Deleted files securely wiped using "shred".

- File listing with file info and thumbnails.

- Temporary personal image galleries.

- Custom user-created channels.

- List and grid (catalog) view modes for channels.

- List and gallery view modes for threads.

- Rating system for threads, quotes and IRC.

- Site/IRC integration API.

- Avatar history with the ability to re-use avatars without uploading them.

- Custom emoticon submission (admin approval required).

- QDB style quote database for IRC quotes (quotes are admin approved).

- Simple pastebin.

- Improved site and IRC API.

- LDAP Authentication support (+ automatic migration)

- JSON based configuration file.

- Most aspects of the site configurable in config.json.

- Display names (+ display name generator).

- Username generator.

- Summary cards for user profiles.

- Profile and user tags.

- NSFW profile toggle.

- Tall avatar support (original avatar source image is used) for profiles (all members) and posts (donators).

- Various configuration options for custom channels (access control, moderator list, NSFW toggle, anon posting toggle, imageboard toggle, etc)

- Channel and thread info displayed on sidebar.

- User flairs.

- Multi-user/profile support (accounts can be linked together and switched between with ease).

- Mobile layout.

- Theme selector.

- All scripts and styles hosted locally.

- Scripts for importing posts from imageboards and RSS feeds.
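For the "securely wiped using shred" feature above, the call could look something like the following sketch. The function names and the pass count are assumptions; only the GNU `shred` flags themselves are real. Note that overwriting in place is not guaranteed to be effective on SSDs or on journaling and copy-on-write filesystems.

```python
import subprocess


def shred_command(path, passes=3):
    """Build the argv for GNU shred: overwrite, add a final zero pass, then unlink."""
    return ["shred", "--iterations=" + str(passes), "--zero", "--remove", "--", path]


def secure_delete(path, passes=3):
    # check=True raises CalledProcessError if shred reports a failure.
    subprocess.run(shred_command(path, passes), check=True)
```

Passing the path after `--` keeps a filename that starts with a dash from being read as an option.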

More images...

file_download.py (unavailable)

Automated per-channel/server/buffer/query link/file archiver script for weechat (async).

fp-ban (unavailable)

Evercookie based fingerprinting + user ban system. Previously used on the Space Station 13 server.

dnsbl

IRC DNSBL style user/bot blocking in PHP.

<?php

function CheckIfSpambot($emailAddress, $ipAddress, $userName, $debug = false)

{

$spambot = false;

$errorDetected = false;

if ($emailAddress != "")

{

$xml_string = file_get_contents("http://www.stopforumspam.com/api?email=" . urlencode($emailAddress));

$xml = new SimpleXMLElement($xml_string);

if ($xml->appears == "yes")

{

$spambot = true;

}

elseif ($xml->appears == "no")

{

$spambot = false;

}

else

{

$errorDetected = true;

}

}

if ($spambot != true && $ipAddress != "")

{

$xml_string = file_get_contents("http://www.stopforumspam.com/api?ip=" . urlencode($ipAddress));

$xml = new SimpleXMLElement($xml_string);

if ($xml->appears == "yes")

{

$spambot = true;

}

elseif ($xml->appears == "no")

{

$spambot = false;

}

else

{

$errorDetected = true;

}

}

if ($spambot != true && $userName != "")

{

$xml_string = file_get_contents("http://www.stopforumspam.com/api?username=" . urlencode($userName));

$xml = new SimpleXMLElement($xml_string);

if ($xml->appears == "yes")

{

$spambot = true;

}

elseif ($xml->appears == "no")

{

$spambot = false;

}

else

{

$errorDetected = true;

}

}

if ($debug == true)

{

return $errorDetected;

}

else

{

return $spambot;

}

}

function ReverseIPOctets($inputip){

$ipoc = explode(".",$inputip);

return $ipoc[3].".".$ipoc[2].".".$ipoc[1].".".$ipoc[0];

}

function IsTorExitPoint($ip){

if (gethostbyname(ReverseIPOctets($ip).".".$_SERVER['SERVER_PORT'].".".ReverseIPOctets($_SERVER['SERVER_ADDR']).".ip-port.exitlist.torproject.org")=="127.0.0.2") {

return true;

} else {

return false;

}

}

function checkbl($ip){

$blacklisted = 0;

$whitelist = array();

$blacklist = array();

$range_blacklist = array();

$city_blacklist = array();

$region_blacklist = array();

$geoip = geoip_record_by_name($ip);

$mask=ip2long("255.255.255.0");

$remote=ip2long($ip);

if (IsTorExitPoint($ip)) {

$blacklisted = 1;

}

if (CheckIfSpambot('', $ip, '')){

$blacklisted = 1;

}

foreach($range_blacklist as $single_range){

if (($remote & $mask)==ip2long($single_range))

{

$blacklisted = 1;

}

}

foreach($city_blacklist as $city){

if ($geoip['city'] == $city)

{

$blacklisted = 1;

}

}

foreach($region_blacklist as $region){

if ($geoip['region'] == $region)

{

$blacklisted = 1;

}

}

if (in_array($ip, $blacklist)) {

$blacklisted = 1;

}

if($blacklisted && !in_array($ip, $whitelist)){

return 1;

}else{

return 0;

}

}

if(isset($_REQUEST['ip'])){

echo checkbl($_REQUEST['ip']);

}else{

echo checkbl($_SERVER['REMOTE_ADDR']);

}

?>
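The two lookups that are easiest to get wrong in the script above are the reversed-octet DNS name and the /24 range check. Here is a small Python sketch of both, using the standard `ipaddress` module; the function names are made up for the example and no DNS query is actually performed.

```python
import ipaddress


def reverse_octets(ip):
    """'203.0.113.7' -> '7.113.0.203' (IPv4 only, like ReverseIPOctets)."""
    return ".".join(reversed(str(ipaddress.IPv4Address(ip)).split(".")))


def exitlist_query_name(client_ip, server_ip, server_port):
    """Compose the ip-port exit-list hostname that the PHP code resolves."""
    return "{}.{}.{}.ip-port.exitlist.torproject.org".format(
        reverse_octets(client_ip), server_port, reverse_octets(server_ip)
    )


def in_blacklisted_range(ip, range_starts):
    """True if ip falls in any /24 whose network address is listed."""
    network = ipaddress.ip_network(ip + "/24", strict=False).network_address
    return any(network == ipaddress.IPv4Address(start) for start in range_starts)
```

An empty range list matches nothing here, which is the behaviour you would want from an unconfigured blacklist.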

simple_bash_uploader

Bash file/screenshot upload script (scrot compatible)

#!/bin/bash

sleep 0.1

export DISPLAY=:0.0

UPLOAD_SERVICE="My Awesome Server"

RANDOM_FILENAME=$(cat /dev/urandom | tr -dc 'a-zA-Z0-9' | fold -w 32 | head -n 1)

IMAGE_PATH="/home/$USER/Screenshots/$RANDOM_FILENAME.png"

REMOTE_USER="test"

REMOTE_SSH_AUTH="~/.ssh/my_ssh_key"

REMOTE_SERVER="example.org"

REMOTE_PORT="22"

REMOTE_PATH="/var/www/html/files/"

REMOTE_URL="https://example.org/files/"

if [ "$1" == "full" ]; then

MODE=""

elif [ "$1" == "active" ]; then

MODE="-u"

elif [ "$1" == "selection" ]; then

MODE="-s"

else

FILE_PATH=$1

FILE_NAME=$(basename "$FILE_PATH")

FILE_EXT=".${FILE_NAME##*.}"

notify-send "$UPLOAD_SERVICE" "Upload of file '$RANDOM_FILENAME$FILE_EXT' started."

scp -i "$REMOTE_SSH_AUTH" -P "$REMOTE_PORT" "$FILE_PATH" "$REMOTE_USER@$REMOTE_SERVER:$REMOTE_PATH$RANDOM_FILENAME$FILE_EXT"

if [ $? -eq 0 ];

then

echo -n $REMOTE_URL$RANDOM_FILENAME$FILE_EXT|xclip -sel clip

notify-send "$UPLOAD_SERVICE" $REMOTE_URL$RANDOM_FILENAME$FILE_EXT

else

notify-send "$UPLOAD_SERVICE" "Upload failed!"

fi

exit

fi

scrot $MODE -z $IMAGE_PATH || exit

notify-send "$UPLOAD_SERVICE" "Upload of screenshot '$RANDOM_FILENAME.png' started."

scp -i $REMOTE_SSH_AUTH -P $REMOTE_PORT $IMAGE_PATH $REMOTE_USER@$REMOTE_SERVER:$REMOTE_PATH

if [ $? -eq 0 ];

then

echo -n "$REMOTE_URL$RANDOM_FILENAME.png"|xclip -sel clip

notify-send "$UPLOAD_SERVICE" "$REMOTE_URL$RANDOM_FILENAME.png"

else

notify-send "$UPLOAD_SERVICE" "Upload failed!"

fi
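The random 32-character filename is the part of this script most worth reusing elsewhere. A Python equivalent might look like the sketch below, assuming the same alphanumeric alphabet; `random_filename` and `public_url` are hypothetical names.

```python
import secrets
import string

ALPHABET = string.ascii_letters + string.digits


def random_filename(extension=".png", length=32):
    """Return a random alphanumeric name, e.g. for an uploaded screenshot."""
    stem = "".join(secrets.choice(ALPHABET) for _ in range(length))
    return stem + extension


def public_url(base_url, filename):
    # Mirrors REMOTE_URL + filename from the script; base_url should end in '/'.
    return base_url + filename
```

`secrets` draws from the operating system's random source, much like reading `/dev/urandom` does in the shell version.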

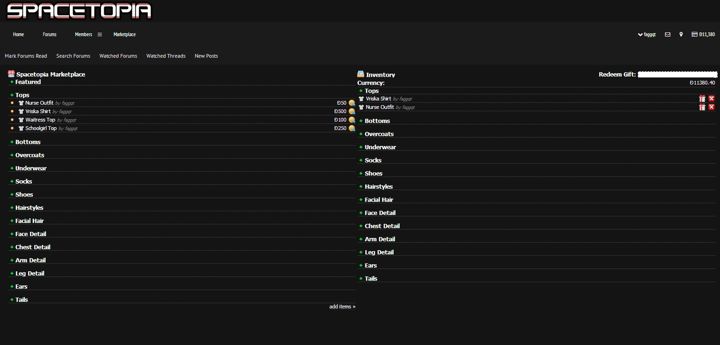

Spacetopia Marketplace

Based on my XenForo forum shop system. Adds extra features for BYOND and Spacetopia integration.

Additional features:

- Support for BYOND sprite files

- Allows user submitted content.

Shop/Market System

A simple shop system, later made modular and given an internal API to make it able to work with any forum or CMS software.

Features:

- Ability to buy items using real money or on-site currency.

- Users can buy on-site currency with real money.

- Users can submit their own items.

- User accounts which serve as banks.

- Automatically calculated item pricing (inflation) based on bank accounts' currency and item ownership.

- Rating and flagging system for items.

- Items have a redeem code for gifting or giveaways.

- An inventory which displays each item you own.

- Items can be either used, activated or downloaded depending on their type.

Smartness Points

Highlights bad grammar and misspellings in red and deducts points for each mistake as a disciplinary measure. Based on the Facepunch Studios smartness system from around 2004-2005.

Features:

- Supports a customizable list of words, thus can be used for more than just grammar/spelling mistakes.

- Users will lose a point for each bad word.

- By correcting a message, the user will gain back any points lost.
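The point bookkeeping described in the list above can be sketched in a few lines of Python. The word list is sample data and the function names are hypothetical; the real mod runs inside the forum software.

```python
import re

BAD_WORDS = {"teh", "alot", "definately"}  # sample list, not the original one


def count_mistakes(message, bad_words=BAD_WORDS):
    """Count how many words of the message are on the configured list."""
    words = re.findall(r"[a-z']+", message.lower())
    return sum(1 for word in words if word in bad_words)


def points_after_edit(points, old_message, new_message, bad_words=BAD_WORDS):
    """Refund the old penalty, then charge the penalty of the edited text."""
    return points + count_mistakes(old_message, bad_words) - count_mistakes(new_message, bad_words)
```

A user on 10 points who posts "Teh cat ate alot" drops to 8, and editing the post to "The cat ate a lot" brings them back to 10.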

Imageboard style warn/ban notices

Appends "USER WAS BANNED FOR THIS POST (REASON)" and/or "USER WAS WARNED FOR THIS POST (REASON)" at the bottom of the user's post.

SS13/BYOND API

Features:

- XenForo user profile info fetching system.

- Trophy (achievement) get and set system.

- BYOND ckey comparison using XenForo custom profile fields.

- Fetch clothing and character customization from user profile fields.

- Shop integration.

- bdBank integration.

Space Station 13 Linux Server Toolkit

#!/bin/bash

source ../byond/bin/byondsetup

cd `dirname $0`

if [ -z "$1" ]; then

echo "Space Station 13 Linux Server Toolkit"

echo "by researcx (https://github.com/researcx)"

echo "Parameters: start, stop, update, compile, version"

exit

fi

LONG=`git --git-dir=../space-station-13/.git rev-parse --verify HEAD`

SHORT=`git --git-dir=../space-station-13/.git rev-parse --verify --short HEAD`

VERSION=`git --git-dir=../space-station-13/.git shortlog | grep -E '^[ ]+\w+' | wc -l`

if [ $1 == "start" ]; then

DreamDaemon 'goonstation.dmb' -port 5200 -log serverlog.txt -invisible -safe &

elif [ $1 == "stop" ]; then

pkill DreamDaemon

elif [ $1 == "update" ]; then

echo 'Downloading latest content from .git'

git clone https://invalid.url.invalid/repo/space-station-13 ../space-station-13/

echo 'Checking for updates'

sh -c 'cd ../space-station-13/ && /usr/bin/git pull origin master'

LONG=`git --git-dir=../space-station-13/.git rev-parse --verify HEAD`

SHORT=`git --git-dir=../space-station-13/.git rev-parse --verify --short HEAD`

VERSION=`git --git-dir=../space-station-13/.git shortlog | grep -E '^[ ]+\w+' | wc -l`

curl "http://invalid.url.invalid/status/status.php?type=update&ver=$VERSION&rev=$LONG"

echo ''

elif [ $1 == "compile" ]; then

echo "Compiling Space Station 13 (Revision: $VERSION)"

TIME="$(bash -c "time DreamMaker ../space-station-13/goonstation.dme &> build.txt" 2>&1 | grep real)"

echo $TIME

LONG=`git --git-dir=../space-station-13/.git rev-parse --verify HEAD`

SHORT=`git --git-dir=../space-station-13/.git rev-parse --verify --short HEAD`

VERSION=`git --git-dir=../space-station-13/.git shortlog | grep -E '^[ ]+\w+' | wc -l`

BUILD="$(tail -1 build.txt)"

cp build.txt /usr/share/nginx/html/

curl -G "http://invalid.url.invalid/status/status.php" --data-urlencode "type=build" --data-urlencode "data=$BUILD" --data-urlencode "ver=$VERSION" --data-urlencode "time=$TIME" --data-urlencode "log=http://invalid.url.invalid/build.txt"

elif [ $1 == "version" ]; then

echo "Version Hash: $LONG ($SHORT)"

echo "Revision: $VERSION"

echo "Changes in this version: https://invalid.url.invalid/repo/space-station-13/commits/$LONG"

else

echo "exiting...";

fi

GitLab • GitHub

git-update

Git repository auto-updater

#!/bin/sh

REPO="https://github.com/<user>/<repo>"

REPO_PATH="/path/to/repository"

LATEST=`/usr/bin/git ls-remote "$REPO" refs/heads/master | /usr/bin/cut -f 1`

CURRENT=`/usr/bin/git -C "$REPO_PATH" rev-parse HEAD`

echo "Current Revision: $CURRENT"

echo "Latest Revision: $LATEST"

if [ "$LATEST" = "$CURRENT" ]; then

echo 'No updates found!'

exit

fi

/usr/bin/git -C "$REPO_PATH" pull
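The same compare-then-pull logic can be written in Python if a shell script is not convenient. This is a sketch with hypothetical function names; it assumes `git` is on the PATH and that the branch is `master`, as in the script above.

```python
import subprocess


def parse_ls_remote(output):
    """Extract the commit hash from 'git ls-remote <repo> <ref>' output."""
    first_line = output.strip().splitlines()[0] if output.strip() else ""
    return first_line.split("\t")[0]


def git_output(*args):
    """Run git with the given arguments and return its standard output."""
    return subprocess.run(["git", *args], check=True, capture_output=True, text=True).stdout


def update_if_needed(repo_url, repo_path, ref="refs/heads/master"):
    """Pull only when the remote head differs from the local HEAD."""
    latest = parse_ls_remote(git_output("ls-remote", repo_url, ref))
    current = git_output("-C", repo_path, "rev-parse", "HEAD").strip()
    if latest == current:
        return False
    git_output("-C", repo_path, "pull")
    return True
```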

researcx/opensim-mod (currently unavailable)

OpenSimulator mods for XenForo bdBank currency and user integration.

More images...

scan_pics

Mass incremental+prefix+suffix photo scanner for direct URLs.

<?php

function zerofill($mStretch, $iLength = 2)

{

$sPrintfString = '%0' . (int)$iLength . 's';

return sprintf($sPrintfString, $mStretch);

}

if(isset($_GET['start'])){

$start = $_GET['start'];

}else{

$start = 0;

}

if(isset($_GET['end'])){

if($_GET['end'] <= 9999){

$end = $_GET['end'];

}else{

$end = 9999;

}

}else{

$end = 9999;

}

if(isset($_GET['url'])){

$url = $_GET['url'];

}else{

die('No parameters specified.');

}

if(isset($_GET['prefix'])){

$prefix = $_GET['prefix'];

}else{

$prefix = null;

}

if(isset($_GET['suffix'])){

$suffix = $_GET['suffix'];

}else{

$suffix = '.jpg';

}

echo '<title>Scanning images from '.htmlspecialchars($prefix.$start.$suffix).' to '.htmlspecialchars($prefix.$end.$suffix).'.</title>';

for($i=$start;$i<$end + 1;$i++){

echo '<img src="'.htmlspecialchars($url.$prefix.zerofill($i,strlen($end)).$suffix).'" width="100" height="100" />';

}

?>
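The URL pattern the scanner walks through (base URL, prefix, zero-padded counter, suffix) is easier to see as a generator. A Python sketch follows; `image_urls` is a hypothetical name and it only builds the URLs, it does not fetch anything.

```python
def image_urls(url, start=0, end=9999, prefix="", suffix=".jpg"):
    """Yield url + prefix + zero-padded counter + suffix for start..end inclusive."""
    end = min(end, 9999)         # same upper bound as the PHP script
    width = len(str(end))        # pad every counter to the width of the last one
    for i in range(start, end + 1):
        yield "{}{}{:0{}d}{}".format(url, prefix, i, width, suffix)
```

For example, `list(image_urls("https://example.org/", 8, 11, "IMG_"))` gives the four URLs ending in `IMG_08.jpg` through `IMG_11.jpg`.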

Various Garry's Mod roleplay scripts + hud design.

Hit the giant enemy crab in its weak spot for massive damage.

Source modding

- City 47

- Texturing (custom map textures)

- Model editing (custom player-models)

Markov forum bot

Uses a combined pyborg IRC database: it takes the replies from a forum thread, adds them to its database and formulates a reply to the thread. It can also reply to individual posts. It is triggered automatically at random, and also has a chance to reply when replied to or mentioned.
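The learn-then-reply loop can be illustrated with a first-order Markov chain. This is a deliberately simplified Python sketch and not pyborg's actual algorithm; the function names and the `max_words` cut-off are assumptions.

```python
import random
from collections import defaultdict


def build_chain(posts):
    """Map each word to the list of words observed directly after it."""
    chain = defaultdict(list)
    for post in posts:
        words = post.split()
        for current, following in zip(words, words[1:]):
            chain[current].append(following)
    return chain


def generate_reply(chain, seed_word, max_words=12, rng=random):
    """Walk the chain from seed_word until a dead end or max_words."""
    reply = [seed_word]
    while len(reply) < max_words and chain.get(reply[-1]):
        reply.append(rng.choice(chain[reply[-1]]))
    return " ".join(reply)
```

Feeding it the replies of a thread and seeding it with a word from the post being answered gives the "formulate a reply" step in miniature.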

SMF forum mods:

- Neopets-style RP item shop

- Smartness

- Ban list, improved ban system

- Upload site with file listing, thumbnails, search, user to user file sharing/transfers

Instant-messaging system with an IRC backend. (unavailable)

ActiveWorlds City Builds

- Rockford

- Parameira

- D2City

Contact Me:

Support Me:

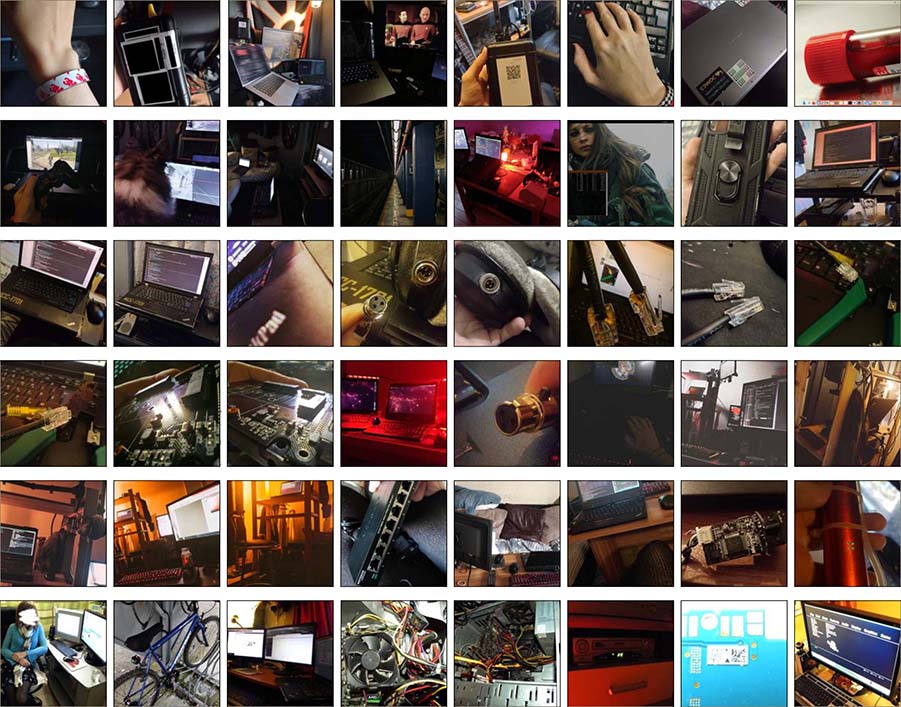

Image Galleries:

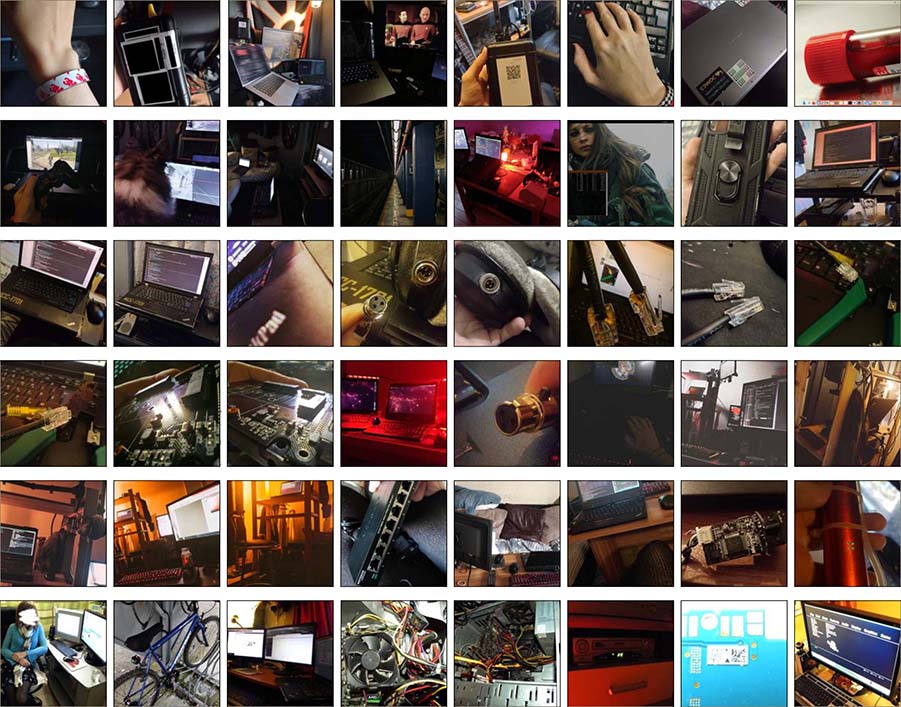

> directory listing - /Technology/


> directory listing - /Imagedump/


> directory listing - /Development/


> directory listing - /Cooking/


> directory listing - /Photos/

> directory listing - /Music/

> directory listing - /Documents/

> directory listing - /Food/

> directory listing - /Games/

"Halfway through reading a Hacker News thread I kick my boot into the computer. Even when it's an original thread I can't stand it. It feels good to smash the computer though. I feel like I'm participating in the discussion."